The pace of innovation in modern agile software development continues to accelerate.

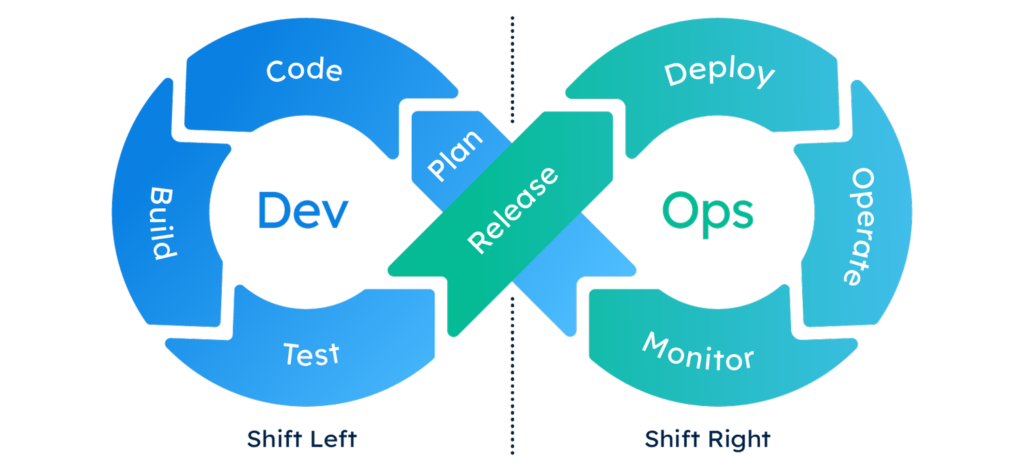

Agile practices have become the norm, with DevOps emerging as the next evolution to enable even faster delivery of high-quality software. Two key principles of DevOps are known as “shift left” and “shift right.”

Both involve moving certain tasks earlier or later in the development lifecycle, but what is the right balance between the two?

Shift Left in DevOps

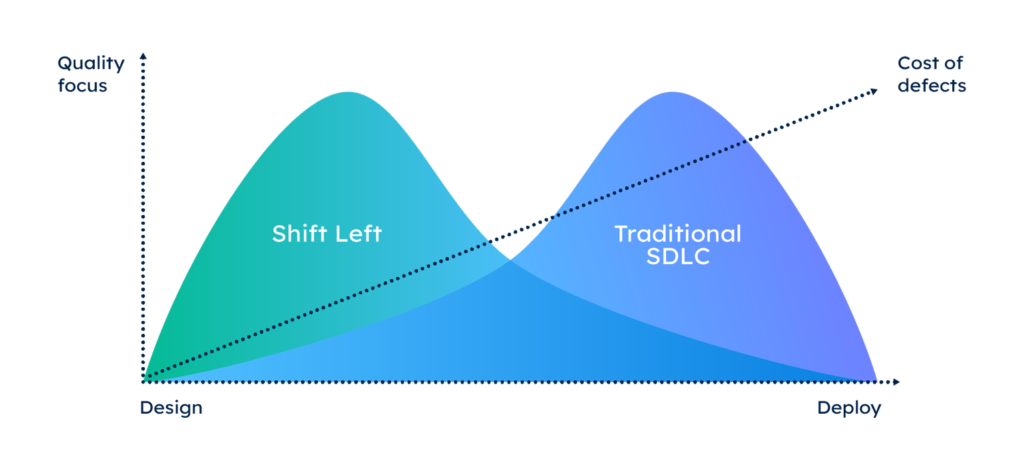

Shift left refers to moving tasks to earlier stages of the development lifecycle. The goal is to prevent defects from being introduced in the first place, rather than catching them later in testing. This enables faster feedback loops, so issues can be addressed when they are the least expensive to fix.

Some examples of shift left include:

- Automated testing – Running unit, integration, and other tests earlier in development to catch bugs early.

- Security scanning – Static analysis and other scans during code development to find vulnerabilities proactively.

- Code reviews – Peer reviews of code to improve quality before moving to testing.

- Continuous integration – Merging code frequently to detect integration issues quickly.

The main benefits of shift left are reduced rework, faster time to market, and higher quality. By addressing problems early, teams avoid the compounding costs of fixing issues later in the lifecycle, and developers get immediate feedback to improve code as they write it.
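To make the fast-feedback idea concrete, here is a minimal sketch of unit tests that could run on every commit, long before a dedicated testing phase. The `apply_discount` function and its rules are hypothetical, invented purely for illustration.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business rule, used only for illustration."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Fast, isolated checks that can run locally and in CI on every commit."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(19.99, 0), 19.99)

    def test_rejects_out_of_range_percent(self):
        # Invalid input is caught here, not by a customer in production.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)
```

Saved in a test module, these can be run with `python -m unittest`. Because they need no external services, a continuous integration job can run them on every merge and flag a regression within seconds.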

Shift Right in DevOps

Shift right refers to moving tasks later in the lifecycle. This reduces the complexity of the early stages, so developers can focus on writing code quickly without getting bogged down. Validation and troubleshooting are deferred to downstream automated processes.

Examples of shift right include:

- Infrastructure provisioning – Delaying resource configuration until deployment time using infrastructure as code.

- Testing automation – Shifting some lower-value manual testing to automated scripts running in production-like environments.

- Monitoring and observability – Relying on production telemetry data to surface issues instead of debugging locally.

- Progressive delivery – Releasing often in small increments to isolate and identify problems post-deployment.

The main benefits of shift right are increased speed and productivity. Eliminating unnecessary tasks early on allows developers to code faster without context switching. Shift right also reduces the need for rework by preventing over-engineering of functionality before it’s validated.
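Progressive delivery is often implemented as a percentage rollout. The sketch below shows one common way to do it, assuming a stable user ID is available: each user is hashed into a bucket from 0 to 99, and the feature is enabled for buckets below the current rollout percentage. The function names and the `new-checkout` feature key are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket in the range 0-99 for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id: str, feature: str, rollout_percent: int) -> bool:
    """Return True if the user falls inside the current rollout percentage."""
    return rollout_bucket(user_id, feature) < rollout_percent


if __name__ == "__main__":
    # Hypothetical feature key and user IDs, for illustration only.
    users = [f"user-{i}" for i in range(1000)]
    for percent in (5, 25, 100):
        enabled = sum(is_enabled(u, "new-checkout", percent) for u in users)
        print(f"{percent}% rollout -> {enabled} of {len(users)} users enabled")
```

Because the bucket is derived from a hash rather than a random draw, a user who sees the feature at 5% still sees it at 25%. That keeps the experience consistent while the rollout widens, and makes problems easier to isolate to a known group of users.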

Striking the optimal balance

So which shift is better? The truth is that DevOps teams need both shift left and shift right to achieve an optimal balance. Too much of either extreme can be problematic:

- All shift left – Excessive early process can slow development velocity and stifle innovation.

- All shift right – Defects may go undetected until later, when fixes are more difficult and time-consuming.

Here are some best practices for balancing shift left and shift right:

- Automate ruthlessly – Shifting tasks to either side requires automation to reduce manual overhead. Prioritize automating unit testing, infrastructure provisioning, deployment pipelines, and monitoring.

- Test early and often – Balance lean code-test cycles against enough automated testing to prevent downstream issues. Focus shift left testing on the riskiest areas.

- Right-size validations – Don’t over-engineer local fault injection, ancillary use cases, and advanced scenarios early on. Defer some validation for the production environment.

- Design for observability – Build in hooks for monitoring, logging, and tracing upfront. This lets teams find issues through production telemetry rather than local debugging.

- Incrementally optimize flow – Bottlenecks naturally surface through small, frequent iterations. Address them just in time without over-optimizing prematurely.

- Adjust continuously – View shift left and right as dials to tune over time, not one-time destinations. Evolve policies as priorities and challenges change.

- Avoid extremes – Steer clear of both endless coding without reviews and over-architecting before any code is written. Mature DevOps teams land in the middle, with the right dose of pre-emptive quality and no drag on velocity.
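As an illustration of the "design for observability" practice in the list above, here is a minimal sketch of a telemetry hook built into the code from the start. It uses only Python's standard `logging` module. The logger name, the `observed` decorator, and the `order_total` function are all hypothetical. A real service would more likely send this data to a metrics or tracing backend.

```python
import functools
import logging
import time

# Hypothetical logger name; route it to whatever log pipeline production uses.
logger = logging.getLogger("app.telemetry")


def observed(operation: str):
    """Decorator that logs the duration and outcome of the wrapped function."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
            except Exception:
                elapsed_ms = (time.perf_counter() - start) * 1000
                # Log the failure with its stack trace, then re-raise unchanged.
                logger.exception(
                    "operation=%s status=error duration_ms=%.1f",
                    operation, elapsed_ms,
                )
                raise
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info(
                "operation=%s status=ok duration_ms=%.1f", operation, elapsed_ms
            )
            return result

        return wrapper

    return decorator


@observed("checkout.total")
def order_total(prices):
    """Hypothetical business function, used only for illustration."""
    return sum(prices)
```

With a hook like this in place, slow or failing operations show up in production logs with a consistent `operation=... status=...` shape, so they can be searched and alerted on without anyone reproducing the issue locally.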

Adopting a context-specific approach

Blanket mandates to universally shift left or right often backfire. The best balance depends heavily on context:

- Application – Critical software may warrant more shift left rigor. Experimental services can start further right.

- Domain – Highly regulated industries like healthcare and finance lean shift left for risk mitigation. Startups often shift right to move fast.

- Organization – Larger teams need more shift left to align work. Small groups can shift right more for speed.

- Maturity – New teams establish shift left foundations first. Mature teams automate more to safely shift right.

There is no one-size-fits-all. The blend depends on the app, domain, organization structure, and maturity level. The mix also evolves as capabilities improve.

The key is assessing where bloat or gaps exist, then tuning left or right accordingly. Is your team over-engineering or slowing down? Are you accumulating technical debt? Once you know, adjust the dials until quality and speed are in balance.

Cultural alignment

Beyond technical implementation, making shifts successful requires cultural alignment:

- Shared ownership – Everyone contributes to quality and velocity, not just developers and testers.

- Incentive alignment – Measure and reward behaviors that balance quality and speed, not just output.

- Empowered teams – Allow squads freedom to adjust the left/right mix within guardrails.

- Blameless culture – Avoid finger-pointing when issues inevitably occur. Focus on learning.

- Customer-centricity – Keep an outside-in perspective. Don’t over-optimize local efficiency at the customer’s expense.

- Continuous improvement – Make steady improvement of the left/right balance a priority at all levels.

DevOps is as much about people and processes as it is about technology. Dialing in the optimal balance requires thoughtful adoption rather than reactive trend-following.

Diffblue Cover helps enable the DevOps left/right balance

Achieving an optimal balance between shift left and shift right is critical for modern software teams. However, doing so often requires extensive test automation to enable fast feedback loops without compromising quality or velocity.

This is where solutions like Diffblue Cover come in. Cover is an AI-powered tool that autonomously writes unit tests for Java code, generating complete test suites without manual intervention. This allows engineering teams to shift left on unit testing rigor without slowing down development.

By automating a traditionally time-intensive activity, Cover helps organizations move quality earlier into the development lifecycle while eliminating bottlenecks. Engineers gain confidence to deliver code quickly, knowing tests are handled. This frees them to focus on higher-value tasks.

In essence, Cover helps enable the shift left and shift right equilibrium that underpins DevOps success. To learn more about seamlessly incorporating AI-generated testing into your CI/CD pipelines, visit Diffblue or try Diffblue Cover today. The future of modern agile software development is here!